Univ.-Prof.in Dr.in Martina Mara (JKU Linz)

Univ.-Prof.in Dr.in Ivona Brandić (TU Wien)

Project Description

Background

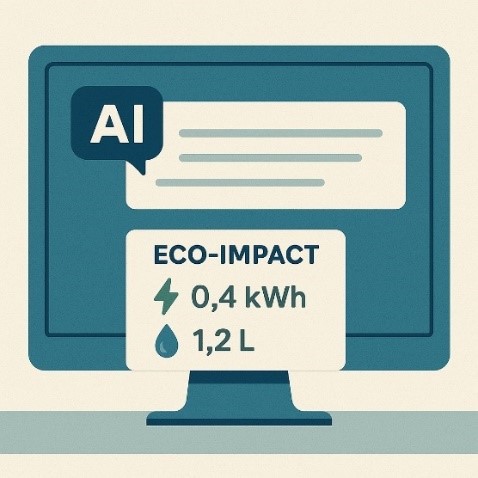

Large Language Models (LLMs) like ChatGPT, Gemini, or DeepSeek are now used by millions of people every day. This creates clear benefits but also a growing environmental footprint: every query consumes electricity, data centers consume water for cooling, and the sum of billions of interactions adds up. Most efforts to make AI “greener” focus on the back end: more efficient models, better data centers, smarter scheduling. Much less attention has been paid to the user side: how we write prompts, how interfaces guide our behavior, and what people understand about the resource cost of their actions. Early work suggests that prompt design can meaningfully change how much computation a task needs, and that eco-feedback, which shows people the impact of their actions, can motivate more sustainable behavior. If billions of daily interactions drive demand, even tiny efficiency gains per session could add up globally. HAI4S addresses this issue from a user-facing perspective on computational sustainability.

© LIT Robopsychology Lab

Vision

The PhD candidate working on the HAI4S project will make a significant scientific contribution to advancing sustainable AI by developing user-centered strategies that reduce the computational demand of LLMs while maintaining, or ideally even improving, user experience. Drawing on theories and methods from Psychology, Human-Computer Interaction, Artificial Intelligence, and Interface Design, the project will deliver empirically grounded insights into the effects of “green prompting” and eco-focused Explainable AI (XAI). A central goal is to empower users to adapt their interaction patterns by equipping them with the skills and tools to engage more consciously with LLMs. An important component will be the development of effective ways to communicate and teach sustainable interaction with AI to non-expert users. Ideally, real-time eco-explanations will allow users to see when their prompts become more energy-efficient, fostering a sense of self-efficacy and impact. In doing so, the project seeks to place human agency at the center of computational sustainability, contributing findings that align environmental goals with real-world user needs.

Research aims

Core research aims of HAI4S may include the following, which the PhD candidate will refine and prioritize with Prof. Mara and a second supervisor at TU Wien.

- Eco-feedback and energy-aware XAI. Design and evaluate LLM interface elements such as eco-feedback dashboards and embedded explanations about resource use that surface real-time energy/water implications of human-AI interactions in understandable ways. Assess effects on acceptance, user experience, and behavioral intentions.

- Measurement and impact. Develop and apply robust estimation and measurement pipelines linking user behavior to resource use of LLMs. Move beyond proxies (tokens/latency) toward direct measurements where feasible, including experiments with locally hosted models or controlled deployments. Examine whether changes at the interaction level scale to meaningful savings, while accounting for potential rebound effects.

- Practice-oriented guidance. Synthesize results into actionable design guidelines and educational materials for sustainable LLM use in personal or educational settings.

Methods

The dissertation within the HAI4S project will be cumulative, i.e., built around 3–5 high-quality journal or top-tier conference publications at relevant venues in HCI, Psychology, and AI, and will combine psychological rigor with hands-on technical work.

The concrete research plan will be developed jointly with the supervision team. As a starting point, it could be structured along the following lines:

- Foundations. Start with a scoping literature review to map out green prompting, eco-feedback for digital systems, and metrics for resource use. From this, derive a clear conceptual model and initial hypotheses about how interface features and usage strategies may influence efficiency and user experience.

- Prototyping and instrumentation. Develop lightweight LLM interface prototypes that make it possible to:

  - operationalize different prompting strategies;

  - implement eco-feedback monitors (e.g., energy/water use signals, comparative footprints);

  - log interaction data securely and ethically for analysis.

  Where possible, supplement estimates with direct measurements (e.g., metering for local models) to evaluate how observed behavioral changes affect real resource use.

- User studies (quantitative and qualitative). Conduct a program of mixed-methods user research, ranging from think-aloud interaction sessions and qualitative user interviews to controlled randomized experiments and online field studies. Typical measures will include self-report data related to user experience, needs fulfillment, acceptance, and behavioral intentions, alongside technical indicators of time, task success, and computational demand. Study designs will remain adaptable so the candidate can refine hypotheses, compare alternative design patterns, and explore different user populations.

- Analysis and synthesis. Use qualitative analyses and appropriate statistical methods for quantitative data to test hypotheses and extract original and relevant insights. Integrate findings across studies into a coherent framework, educational materials, and practical design guidelines that others can reuse.

Where feasible, preregister key quantitative studies; share materials, code, and anonymized data; and document measurement procedures so results are transparent and replicable.

About the Hosting Institute

The Robopsychology Lab at the Linz Institute of Technology (LIT), Johannes Kepler University Linz, investigates how people experience intelligent machines—and how new technologies can be designed to meet the needs of diverse user groups.

Our work blends theories and methods from Psychology with current questions in Human-AI and Human-Robot Interaction. Among other things, we study how artificial human-likeness and social cues of AI systems shape user perceptions and behavior; when (and how) explainability and understandability foster well-calibrated trust and adequate decision-making in human-machine collaboration; how to strengthen AI literacy in the broader public; and how AI-based technologies affect users’ sense of autonomy, competence, and creative self-efficacy.

The Robopsychology Lab works with a wide range of empirical methods: in addition to quantitative research designs such as large-scale user studies and controlled experiments, we also use qualitative approaches such as focus groups, as well as participatory formats including citizen science projects and co-design workshops.

A special focus of the Robopsychology Lab is on science communication. The team creates award-winning projects that communicate the psychological, social, and ecological implications of AI and robotics to the general public, curates research-based exhibitions, and develops interactive installations that make scientific content relatable. The recent project “Songs about AI,” within which the Robopsychology Lab also launched the rap song “Hi, AI (134 TWh),” earned the team a Kepler Award for Excellence in Science Communication.

Located in the LIT Open Innovation Center on JKU’s campus in Linz, the Robopsychology Lab benefits from an open, transdisciplinary ecosystem and regular collaboration with partners from civil society, industry, and neighboring academic disciplines.

For the prospective doctoral researcher in the HAI4S PhD project, the Robopsychology Lab offers a dedicated workspace, computing infrastructure, a fully equipped user-study lab, and software licenses as needed.

More information: www.jku.at/lit/robopsychology

About the Principal Investigator / PhD Supervisor

The HAI4S PhD project will be conducted under the supervision of Prof. Dr. Martina Mara.

Martina is a Full Professor of Psychology of Artificial Intelligence & Robotics and the Head of the LIT Robopsychology Lab at JKU Linz. She completed her PhD in Psychology at the University of Koblenz-Landau (2014), focusing on user acceptance of highly human-like robots, and received her habilitation (venia docendi) in Psychology from the University of Nuremberg (2022). An empirical psychologist by training with an interdisciplinary outlook, she examines how people perceive, trust, and collaborate with intelligent systems, and how system design can support human autonomy, competence, and wellbeing.

Before joining JKU in 2018, Martina worked for many years outside academia, including at Ars Electronica Futurelab, where she collaborated with international partners—among others, tech companies across Europe and Japan—at the interface of technology, research, and the arts.

She has served as principal investigator on several large, collaborative research projects and publishes regularly in leading psychology and HCI venues (e.g., Computers in Human Behavior, International Journal of Human-Computer Interaction, CHI Conference on Human Factors in Computing Systems). She also serves as General Chair of the CHIWork 2026 Conference, taking place in Linz.

In teaching, Martina contributes across curricula in Artificial Intelligence, Psychology, Computer Science, and Art x Science. Her courses cover human-centered and sustainable AI, media & technology psychology, and transdisciplinary research methods, reaching hundreds of students each term.

Beyond research and teaching, she is passionate about science communication, regularly giving public talks and developing interactive exhibitions on her lab’s topics. As an active voice in public discourse on human-centered AI, she has received recognitions including the Vienna Women’s Prize for “Digitalization,” a Futurezone Award for “Women in Tech,” and the Käthe Leichter Prize for outstanding contributions to women’s and gender research from the Austrian Federal Ministry for Digital and Economic Affairs.

Martina actively advances gender equality in AI. She co-founded the Austrian Initiative Digitalisierung Chancengerecht (IDC), which promotes gender-fair digitalization across public policy, research, industry, and media. She also serves as a mentor for early-career women scientists through university programs and professional networks.

As a supervisor, Martina is supportive, structured, and impact-oriented. She emphasizes rigorous empirical methods (e.g., thoughtful study design, preregistration, sharing of materials where feasible) and encourages professional growth through conference participation, grant writing, and teaching practice. Doctoral researchers can expect clear milestones, regular and constructive feedback, and an inclusive, interdisciplinary research culture.